Posts Tagged probability

Patriots make a mockery of 249 to 1 odds against them

Check out this Super Bowl win probability chart by ESPN Stats & Info. It remains bottomed out at an Atlanta Falcons victory from halftime on to the end of regulation, after which the Patriots ultimately prevail. When New England settled for a field goal to cut their deficit to 16 points (28-to-12), the ESPN algorithm registered a 0.4% probability for them to win, with 9 minutes and 44 seconds left in the game. That computes to 249 to 1 odds against a Patriots victory. Ha!
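For anyone who wants to check the conversion from a win probability to odds against, here is a minimal sketch. The function name `odds_against` is made up for illustration, and the 0.4% figure is entered as the exact fraction 4/1000 to avoid rounding noise.

```python
from fractions import Fraction


def odds_against(probability: Fraction) -> Fraction:
    """Return the odds against an event: expected losses per win."""
    if not 0 < probability <= 1:
        raise ValueError("probability must be in (0, 1]")
    return (1 - probability) / probability


# 0.4% win probability written exactly as 4/1000
print(odds_against(Fraction(4, 1000)))  # 249
```

In other words, a 1-in-250 chance means 249 expected losses for every win.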

I am not terribly surprised that a team could overcome such odds. The reason is that on December 29, 2006 I attended the Insight Bowl in Tempe, Arizona where the Red Raiders of Texas Tech, after falling behind 38-7 with 7:47 remaining in the third quarter, rallied to score 31 unanswered points and ultimately defeat my Gophers in overtime. At the time it was the greatest comeback of all time in a bowl game, matched only after another decade passed with the 2016 Alamo Bowl victory by the TCU Horned Frogs, who trailed the Oregon Ducks 0-31 at halftime. But the Ducks had more time than the Gophers to throw away their sure victory. I entered our 2006 chances of victory in this football win probability calculator. It says 100.00% that Minnesota must win. Ha!

The laws of probability, so true in general, so fallacious in particular.

– Edward Gibbon

Lottomania revving up with Powerball pot approaching a half billion dollars

Despite odds of only 1 in nearly 300 million for a win, Americans are lining up to buy tickets for a chance at the ninth largest jackpot in U.S. history. Why bother? Evidently most of us, e.g., my wife,* suffer from “availability bias”. This occurs due to the diabolical way that lottery officials publicize winners, which, when magnified by the media, makes it seem that these windfalls are commonplace.

Another fallacy, which tricks analytical types like me, is assuming that expected value becomes positive when the jackpot builds. That is, for every $1 invested, more than that is likely to be returned, at least on average. The flaw in this calculation is that lottomaniacs swarm on the big pots, thus making it very likely that the payoff must then be shared among multiple winners. For details, follow this thread on XKCD’s forum.
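To see how shared jackpots drag down the expected value, here is a rough sketch. It assumes the number of other winning tickets follows a Poisson distribution, and it ignores smaller prizes, taxes, and lump-sum discounts. The function names and the jackpot, odds, and ticket-sales figures are hypothetical, chosen only for illustration.

```python
import math


def expected_share_factor(expected_other_winners: float) -> float:
    """Average fraction of the jackpot a winner keeps.

    This is E[1 / (1 + K)] for K ~ Poisson(lam), which simplifies to
    (1 - exp(-lam)) / lam.
    """
    lam = expected_other_winners
    if lam < 0:
        raise ValueError("expected_other_winners must be non-negative")
    if lam == 0:
        return 1.0
    return -math.expm1(-lam) / lam


def expected_jackpot_return(jackpot: float, win_probability: float,
                            other_tickets_sold: float) -> float:
    """Expected jackpot winnings for one ticket (jackpot prize only)."""
    lam = other_tickets_sold * win_probability
    return win_probability * jackpot * expected_share_factor(lam)


# Hypothetical figures, for illustration only
P_WIN = 1 / 300_000_000
JACKPOT = 500_000_000
for sold in (0, 100_000_000, 600_000_000):
    print(sold, round(expected_jackpot_return(JACKPOT, P_WIN, sold), 2))
```

The more tickets everyone else buys, the smaller the share factor, so the expected return per ticket falls even while the advertised jackpot stays the same.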

*I asked her “If you won the lottery, would you still love me?” and she said: “Of course I would. I’d miss you, but I’d still love you.” (Credit goes to Irish comedian Frank Carson for this witty comeback.)

Picking on P in these times of measles

Posted by mark in science, Uncategorized on February 3, 2015

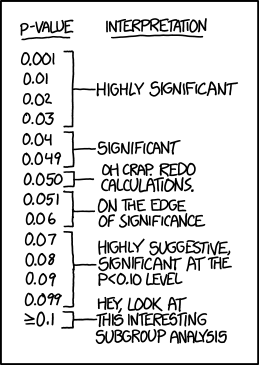

Randall Munroe takes a poke at over-valuers of p in this XKCD cartoon

Nature weighed in with their shots against scientists who misuse P values in this February 2014 article by statistics professor Regina Nuzzo. She bemoans the data dredgers who come up with attention-getting counterintuitive results using the widely-accepted 0.05 P filter on long-shot hypotheses. A prime example is the finding by three University of Virginia researchers that moderates literally perceived the shades of gray more accurately than extremists on the left and right (P=0.01). As they admirably admitted in this follow-up report on Restructuring Incentives and Practices to Promote Truth Over Publishability, this controversial effect evaporated upon replication. This chart on probable cause reveals that these significance chasers produce results with a false-positive rate of near 90%!
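One way to see how a false-positive rate of this size can arise is a Bayesian bound on how much evidence a P value can carry, the -e·p·ln(p) calibration. That this matches the method behind the chart is an assumption; the post does not spell it out. The sketch below combines the bound with an assumed prior probability that the effect is real. The function name and the 5% "long shot" prior are illustrative choices.

```python
import math


def min_false_positive_risk(p_value: float, prior_prob_real: float) -> float:
    """Lower bound on P(no real effect | data) for a given P value.

    Uses the -e * p * ln(p) bound on the Bayes factor, valid for p < 1/e.
    prior_prob_real is an assumed input, not something the data supply.
    """
    if not 0 < p_value < 1 / math.e:
        raise ValueError("p_value must be in (0, 1/e)")
    if not 0 < prior_prob_real < 1:
        raise ValueError("prior_prob_real must be in (0, 1)")
    # Largest Bayes factor in favor of a real effect that this P value allows
    bayes_factor_max = 1.0 / (-math.e * p_value * math.log(p_value))
    prior_odds = prior_prob_real / (1.0 - prior_prob_real)
    posterior_odds = prior_odds * bayes_factor_max
    return 1.0 / (1.0 + posterior_odds)


# Long-shot hypothesis (assumed 5% prior) versus a toss-up (50% prior)
for prior in (0.05, 0.5):
    print(prior, round(min_false_positive_risk(0.05, prior), 2))
```

Under these assumptions, a long shot that just clears P=0.05 is still far more likely to be a false alarm than a real effect, while a toss-up hypothesis fares much better.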

Nuzzo lays out a number of proposals to put a damper on overly-confident reports on purported scientific studies. I like the preregistered replication standard developed by Andrew Gelman of Columbia University, which he noted in this article on The Statistical Crisis in Science in the November-December issue of American Scientist. This leaves scientists free to pursue potential breakthroughs at early stages when data remain sketchy, while subjecting them to rigorous standards further on—prior to publication.

“The irony is that when UK statistician Ronald Fisher introduced the P value in the 1920s, he did not mean it to be a definitive test. He intended it simply as an informal way to judge whether evidence was significant in the old-fashioned sense: worthy of a second look.”

High rollers beat the lottery odds

Posted by mark in Consumer behavior on April 2, 2012

The $640 million jackpot in the Mega Millions lottery Friday night created a huge buzz. Unfortunately this fizzled out for all but the three big winners in Illinois, Kansas, and Maryland. Even we analytical types get swept up in the frenzy, seeing as how the money sunk in prior drawings brings the expected value over 100 percent. Yes, the odds of 1 in 176,000,000 remain daunting, but it sure is fun to have a few numbers to play with.

The really gutsy lottery wonks focus on other games where situations arise that make huge bets nearly a sure thing. For example, see this Boston Globe heads-up on “a game with a windfall for a knowing few”. Imagine showing up at your corner gas station with $100,000s in cash for lottery tickets—that would be a great day for the owner, especially given the seller earns a commission that can grow to $100,000 for some jackpots.

“Bettors like the Selbees, who spent at least $500,000 on the game, had almost no risk of losing money.”

– Mark Kon, a professor of math and statistics at Boston University

Strategy of experimentation: Break it into a series of smaller stages

Posted by mark in design of experiments on June 20, 2011

Tia Ghose of The Scientist provides a thought-provoking “Q&A” with biostatistician Peter Bacchetti on “Why small is beautiful” in her June 15th column seen here. Peter’s message is that you can learn from a small study even though it may not provide the holy grail of at least 80 percent power.* The rule-of-thumb I worked from as a process development engineer is not to put more than 25% of your budget into the first experiment, thus allowing the chance to adapt as you work through the project (or abandon it altogether). Furthermore, a good strategy of experimentation is to proceed in three stages:

- Screening the vital few factors (typically 20%) from the trivial many (80%)

- Characterizing main effects and interactions

- Optimizing (typically via response surface methods).

For a great overview of this “SCO” path for successful design of experiments (DOE) see this detailing on “Implementing Quality by Design” by Ronald D. Snee in Pharm Pro Magazine, March 23, 2010.

Of course, at the very end, one must not overlook one last step: confirmation and/or verification.

* I am loath to abandon the 80% power “rule”.** Rather, increase the size of effect that you screen for in the first stage, that is, do not use too fine a mesh.

** For a primer on power in the context of industrial experimentation via two-level factorial design, see these webinar slides posted by Stat-Ease.

Simple and cheap safety precautions against the small risk of drowning in an automobile

I always thought that if I was in a car that went into water, I’d be cool enough to roll down the windows, or wait until it submerges before opening the door (otherwise the pressure differential makes it impossible). Based on actual experimentation, the hosts of the television show Mythbusters felt the same way, that is, until viewers pointed out that many cars turn turtle as they sink. So in a show I watched last month they [Jamie Hyneman and Adam Savage] tried this. It was a disaster! The Mythbuster driver [Adam] survived only by breathing from an emergency air source, and the safety diver had to cut his way out of a seat belt that wouldn’t release! See this recap to learn what went wrong. What they don’t show is how at first the car just floated, so it seemed like no big deal; but then when it sank, the automobile went down incredibly fast. En route to the bottom of the lake the car spun around so much that the occupants would’ve drowned for sure. Scary!

After this epiphany, I ordered several of these inexpensive (<$5) safety hammers (see one pictured) for cars owned by me and my offspring.

Check out this post by First Aid Monster for another video showing how fast you can go underwater when a car runs into a canal, river, pond, lake or ocean. They suggest buying a safety hammer and provide a link to one similar to what I bought.

When I advised family and co-workers to be prepared for being trapped in a car that goes underwater, a few met the advice with great skepticism.

One individual wondered how many people die this way, figuring it to be so unlikely as to not be worth any worries. From internet research, the best I can figure is that about 10% of all drownings occur in submerged cars. Then using statistics from this graphic by the National Safety Council putting the odds of death by drowning at 1 in 1000, I figure that dying this way in a car occurs at about a 1 in 10,000 rate – somewhat less likely than dying in a plane crash. I’ve flown hundreds of times and never yet come across anyone refusing to buckle up as required when taking off and landing. Why not?

Another person expressed strong doubts as to whether the hammers could break an automobile window. I cannot yet say from first-hand experimentation, but this video provides convincing evidence, I feel.

Anyways, I’m putting the little <$5 safety hammers in all my cars. Why not? It could come in handy some day, if not for me to escape a submerged car, then maybe for some other event that requires breaking glass – someone trapped in a car crash on land, for example.

Bear in mind that I am from Minnesota, a state that boasts of having 10,000 lakes and where a bridge fell down into the Mississippi not that long ago. Furthermore, I live in a town (Stillwater) with a rotten old lift bridge that may be the next to fail according to this recent report by The History Channel.

Quantifying statements of confidence: Is anything “iron clad”?

Posted by mark in Basic stats & math, Uncategorized on August 19, 2010

Today’s “daily” emailed by The Scientist features a heads-up on “John Snow’s Grand Experiment of 1855”, suggesting that his pioneering epidemiology on cholera may not be as “iron clad” as originally thought. A commentator questions what “iron clad” means in statistical terms.

It seems to me that someone ought to develop a numerical confidence scale along these lines. For example:

- 100% Certain.

- 99.9% Iron clad.

- 99% Beyond a shadow of a doubt.

- 95% Unequivocal.

- 90% Definitive.

- 80% Clear and convincing evidence.

- 50% On the balance of probabilities.

There are many other words used to convey a level of confidence, such as: clear-cut, definitive, unambiguous, conclusive. How do these differ in degree?

Of course much depends on who is making such a statement, many of whom are not always right, but never in doubt. ; ) I’m skeptical of any assertion, thus I follow the advice of famed statistician W. Edwards Deming:

“In God we trust, all others bring data.”

Statistics can be very helpful for stating any conclusion because it allows one to never have to say you are certain. But are you sure enough to say it’s “iron clad” or what?

Making random decisions on the basis of a coin flip

Posted by mark in Basic stats & math on August 28, 2009

I watched the movie Leatherheads last night – a comedic tribute to the early days of professional football. It re-created the first coin flip used to determine which team would kick off. The referee allowed the coin to fall to the ground – introducing a bit more randomness into the outcome (as opposed to him catching it). That’s one of the findings presented in Dynamical Bias in the Coin Toss by a trio of mathematics and statistics professors from Stanford and UC Santa Cruz. Surprisingly, they report that for “natural flips” the chance of a coin coming up as started is 51 percent. In other words, this procedure for creating an even probability is biased by the physics.

My “heads up” (ha ha) on this came from a former colleague of mine. He sent me a link that led me to this flippant (pun intended) summary by blogger James Devlin. Devlin warns against spinning a coin to create a 50/50 outcome – a heavy-headed coin can fall tails-up as much as 80 percent of the time! It seems to me that this approach would also increase the odds of a flipistic singularity – normally very rare (1 in 6000 chance).

Another colleague, who once collected comics about Donald Duck, told me the tale of flipism – a random way to live life. However, I think I will not go down this road, but rather quit this blog while I am still ahead.

“Life is but a gamble! Let Flipism chart your ramble.”

– Slogan in Flip Decision by Carl Barks

PS. A fellow trainer starts off statistics workshops with a fun icebreaker that gets students involved with flipping a coin. He asks the class what they expect for an outcome and then challenges this assumption experimentally. The first student gets heads, which the trainer tallies on a flipchart. Each student in turn gets the same outcome until someone finally gets suspicious and discovers that it’s a two-headed quarter.

Overreacting to patterns generated at random – Part 2

Professor Gary Oehlert provided this heads-up as a postscript on this topic:

“You might want to look at Diaconis, Persi, and Frederick Mosteller, 1989, “Methods for Studying Coincidences” in the Journal of the American Statistical Association, 84:853-61. If you don’t already know, Persi was a professional magician for years before he went back to school (he ran away from the circus to go to school). He is now at Stanford, but he was at Harvard for several years before that.”

I found an interesting writeup on Persi Diaconis and a bedazzling photo of him at Wikipedia. The article by him and Mosteller notes that “Coincidences abound in everyday life. They delight, confound, and amaze us. They are disturbing and annoying. Coincidences can point to new discoveries. They can alter the course of our lives; where we work and at what, whom we live with, and other basic features of daily existence often seem to rest on coincidence.”

However, they conclude that “Once we set aside coincidences having apparent causes, four principles account for large numbers of remaining coincidences: hidden cause; psychology, including memory and perception; multiplicity of endpoints, including the counting of “close” or nearly alike events as if they were identical; and the law of truly large numbers, which says that when enormous numbers of events and people and their interactions cumulate over time, almost any outrageous event is bound to occur. These sources account for much of the force of synchronicity.”

I agree with this skeptical point of view as evidenced by my writing in the May 2004 edition of the Stat-Ease “DOE FAQ Alert” on Littlewood’s Law of Miracles, which prompted Freeman Dyson to say “The paradoxical feature of the laws of probability is that they make unlikely events happen unexpectedly often.”
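The law of truly large numbers is easy to put into numbers. Here is a minimal sketch of the chance that a rare event turns up at least once across many independent opportunities. The function name and the one-in-a-million figures are illustrative assumptions, not taken from the article.

```python
import math


def prob_at_least_once(p_event: float, n_trials: float) -> float:
    """P(event occurs at least once in n independent trials).

    Computes 1 - (1 - p)^n using log1p/expm1, which keeps precision
    when p is tiny and n is huge.
    """
    if not 0 <= p_event < 1:
        raise ValueError("p_event must be in [0, 1)")
    return -math.expm1(n_trials * math.log1p(-p_event))


# A "one in a million" event given a million chances to happen
print(round(prob_at_least_once(1e-6, 1_000_000), 3))
```

Give a one-in-a-million event a million chances and it becomes more likely than not to show up.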

Overreacting to patterns generated at random — Part 1

My colleague Pat Whitcomb passed along the book Freakonomics to me earlier this month. I read a story there about how Steven D. Levitt, the U Chicago economist featured by the book, used statistical analysis To Catch a Cheat – teachers who improved their students’ answers on a multiple-choice skills assessment (Iowa Test). The book provides evidence in the form of an obvious repeating of certain segments in otherwise apparently-random answer patterns from presumably clueless students.

Coincidentally, the next morning after I read this, Pat told me he discovered a ‘mistake’ in our DX7 user guide by not displaying subplot factor C (Temp) in random run order. The data are on page 12 of this Design-Expert software tutorial on design and analysis of split plots. They begin with 275, 250, 200, 225 and 275, 250, 200, 225 in the first two groupings. Four out of the remaining six groupings start with 275. Therefore, at first glance at this number series, I could not disagree with Pat’s contention, but upon further inspection it became clear that the numbers are not orderly. On the other hand, are they truly random? I thought not. My hunch was that the original experimenter simply ordered numbers arbitrarily rather than using a random number generator.*

I asked Stat-Ease advisor Gary Oehlert. He says “There are 4 levels, so 4!=12 possible orders. You have done the random ordering 9 times. From these 9 you have 7 unique ones; two orders are repeated twice. The probability of no repeats is 12!/(3!*12^12). This equates to a less than .00001 probability value. Seven unique patterns, as seen in your case, is about the median number of unique orders.”
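This kind of reasoning can be checked with a short sketch. It assumes each of the 9 groupings is an independent, uniformly random ordering of the 4 levels. Since 4! evaluates to 24, the number of possible orders is left as a parameter rather than fixed at the quoted 12, so either figure can be tried. The function names are hypothetical.

```python
import math
import random
from collections import Counter


def prob_no_repeats(n_orders: int, n_draws: int) -> float:
    """P(all draws distinct) when each draw is uniform over n_orders."""
    if n_draws > n_orders:
        return 0.0
    return math.perm(n_orders, n_draws) / n_orders ** n_draws


def simulate_unique_counts(n_orders: int, n_draws: int,
                           n_sims: int = 20_000, seed: int = 1) -> Counter:
    """Tally how many distinct orders appear in each simulated experiment."""
    rng = random.Random(seed)
    counts = Counter()
    for _ in range(n_sims):
        draws = [rng.randrange(n_orders) for _ in range(n_draws)]
        counts[len(set(draws))] += 1
    return counts


n_orders = math.factorial(4)  # 24 possible orders of 4 levels
print(round(prob_no_repeats(n_orders, 9), 3))
print(sorted(simulate_unique_counts(n_orders, 9).items()))
```

With 24 possible orders the chance of no repeats among 9 randomizations works out to roughly 0.18, so a repeat or two is the usual outcome. The simulated tally shows where 7 unique orders falls in the distribution.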

Of course, I accept Professor Oehlert’s advice that I should not concern myself with the patterns exhibited in our suspect data. One wonders how much time would be saved by mankind as a whole by worrying less over what really are chance occurrences.

*The National Institute of Standards and Technology (NIST) provides comprehensive guidelines on random number generation and testing – a vital aspect of cryptographic applications.